Dynamic Resource Allocation Using a DRL Method in 5G Network

Keywords:

5G Cellular Network, Network Slicing, DRL, Deep Q-Learning Network

Abstract

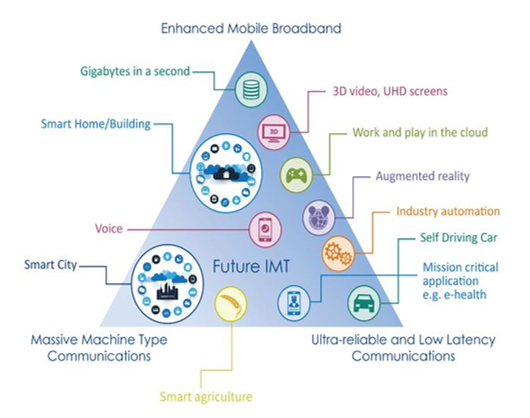

Wireless communication has become increasingly popular over the past two decades. 5G aims to provide higher bandwidth, lower latency, greater capacity and better QoS (quality of service) than 4G. The 5G cellular network combines two technologies, SDN (software-defined networking) and NFV (network function virtualization), for advanced network management. This paper presents the main concepts related to RA (resource allocation) in a 5G network and to network slicing, the idea of dividing the physical network into multiple independent logical networks (slices), each satisfying specific requirements while offering superior QoS. 5G network services can be classified into three verticals: (i) enhanced Mobile Broadband (eMBB), (ii) Ultra-Reliable Low-Latency Communication (URLLC), and (iii) massive Machine-Type Communications (mMTC). Users require well-organized resource allocation and management across these services. In this work, we implement a resource allocation module based on Deep Reinforcement Learning (DRL) that uses a deep neural network to estimate the Q-value function; the network learns from previous experience and adapts to changing environments. The results show that the implemented simulation achieves better resource allocation than previous models, leading to lower latency and higher throughput.
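The abstract describes a DQN-style agent: a deep neural network estimates the Q-value of each allocation decision and learns from stored experience. The paper's exact state, action and reward definitions are not given here, so the following is only a minimal sketch under a hypothetical toy setting: the three slices (eMBB, URLLC, mMTC) share `CAPACITY = 3` resource blocks, the state is each slice's current demand, an action is one way to split the blocks, and the reward penalizes unmet demand with assumed per-slice weights. All names (`train_dqn`, `allocate`, `WEIGHTS`, and so on) are illustrative. It shows the standard DQN ingredients in dependency-free Python: a neural Q-function, epsilon-greedy exploration, experience replay and a periodically synchronized target network.

```python
import random

# Hypothetical toy setting (assumption, not the paper's model):
# three slices share CAPACITY resource blocks in every time step.
SLICES = ("eMBB", "URLLC", "mMTC")
WEIGHTS = (1.0, 3.0, 0.5)  # assumed penalty per unserved block; URLLC weighted highest
CAPACITY = 3
MAX_DEMAND = 2
# Action space: every way to split CAPACITY blocks among the three slices.
ACTIONS = [(a, b, CAPACITY - a - b)
           for a in range(CAPACITY + 1) for b in range(CAPACITY + 1 - a)]


def reward(demand, alloc):
    """Negative weighted unmet demand (blocks a slice wanted but did not get)."""
    return -sum(w * max(d - x, 0) for w, d, x in zip(WEIGHTS, demand, alloc))


def features(demand):
    """Normalize per-slice demand to [0, 1] as network input."""
    return [d / MAX_DEMAND for d in demand]


class QNetwork:
    """One-hidden-layer ReLU MLP mapping a state to one Q-value per action."""

    def __init__(self, n_in, n_hidden, n_out, rng):
        self.w1 = [[rng.gauss(0, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.gauss(0, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        q = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(self.w2, self.b2)]
        return h, q

    def copy_from(self, other):
        self.w1 = [row[:] for row in other.w1]
        self.b1 = other.b1[:]
        self.w2 = [row[:] for row in other.w2]
        self.b2 = other.b2[:]

    def sgd_step(self, x, action, target, lr):
        """One SGD step on 0.5 * (Q(x, action) - target)**2; returns the squared TD error."""
        h, q = self.forward(x)
        err = q[action] - target
        for j, hj in enumerate(h):
            grad_h = err * self.w2[action][j] if hj > 0 else 0.0  # backprop through ReLU
            self.w2[action][j] -= lr * err * hj
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * grad_h * xi
            self.b1[j] -= lr * grad_h
        self.b2[action] -= lr * err
        return err * err


def train_dqn(steps=2000, gamma=0.5, lr=0.01, batch=8, hidden=16, seed=0):
    rng = random.Random(seed)
    online = QNetwork(len(SLICES), hidden, len(ACTIONS), rng)
    target = QNetwork(len(SLICES), hidden, len(ACTIONS), rng)
    target.copy_from(online)
    replay = []  # experience replay buffer of (state, action, reward, next_state)
    state = tuple(rng.randint(0, MAX_DEMAND) for _ in SLICES)
    for step in range(steps):
        eps = max(0.05, 1.0 - step / (0.7 * steps))  # linearly decaying epsilon-greedy
        if rng.random() < eps:
            action = rng.randrange(len(ACTIONS))
        else:
            q = online.forward(features(state))[1]
            action = q.index(max(q))
        r = reward(state, ACTIONS[action])
        next_state = tuple(rng.randint(0, MAX_DEMAND) for _ in SLICES)  # assumed random demand
        replay.append((state, action, r, next_state))
        if len(replay) > 1000:
            replay.pop(0)
        for s, a, rr, s2 in rng.sample(replay, min(batch, len(replay))):
            y = rr + gamma * max(target.forward(features(s2))[1])  # TD target from target net
            online.sgd_step(features(s), a, y, lr)
        if step % 100 == 0:
            target.copy_from(online)  # periodic target-network sync
        state = next_state
    return online


def allocate(net, demand):
    """Greedy allocation (blocks per slice) chosen by a trained Q-network."""
    q = net.forward(features(demand))[1]
    return ACTIONS[q.index(max(q))]
```

For example, `net = train_dqn()` followed by `allocate(net, (2, 2, 0))` returns a tuple giving the number of blocks for each slice in `SLICES` order. Because the assumed demand is drawn independently of the action, this toy problem is close to a contextual bandit; a more realistic slice model would carry queue or buffer state across steps so that the discount factor `gamma` shapes the policy.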




License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.


IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. This license lets others share and adapt the material provided they give appropriate credit, provide a link to the license, and indicate if changes were made; if they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.