Intelligent Conversational Agents Based Custom Question Answering System

Keywords:

Tortoise TTS, Custom Question Answering, VOCA, FLAME, GAN

Abstract

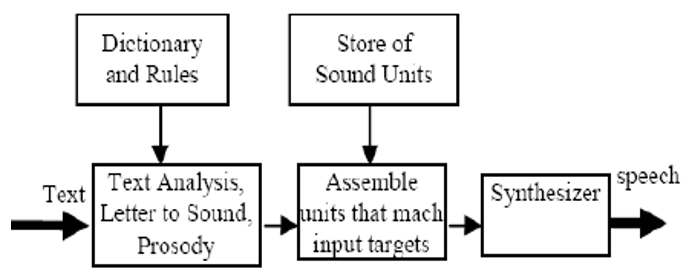

Intelligent conversational agents have become increasingly popular in recent years and have numerous applications in education, customer service, and entertainment. In this paper, we present an intelligent conversational agent that acts as a historical personality. The goal of this research is to create a system that provides accurate and engaging information about historical figures in a conversational manner. The digital characters answer questions with spoken audio responses and lip-synchronized changes in facial expression. The system uses the Azure custom question answering service to generate question-answer pairs, which are used to train the model to answer questions accurately. The voice of each digital character is cloned with the Tortoise TTS model of the TortoiseAI team. The audio produced by the voice-cloning model is then used together with the VOCA and FLAME models and an end-to-end speech-driven facial animation system based on a temporal GAN to produce the lip-syncing and facial expressions of the digital characters. The temporal GAN consists of a generator and three discriminators (frame, sequence, and synchronization discriminators) that together drive the generation of an automatically lip-synced talking head from only a still 2D image of a person and a voice clip. A subjective listening test evaluated the lip-syncing and facial expressions and showed that the digital characters produced believable and accurate responses. The proposed system allows users to add new characters and is suitable for educational deployment. User study results show high accuracy and an engaging user experience, suggesting that our approach is a promising advancement in educational conversational agents.
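
The abstract chains three stages: answer lookup, voice cloning, and talking-head animation. It also states that users can add new characters. Below is a minimal sketch of that flow under clearly stated assumptions. `Character`, `tokenize`, `respond`, the field names, and the 0.3 threshold are all hypothetical. Answer lookup uses simple Jaccard token overlap as a stand-in for the hosted Azure knowledge base, which ranks answers with its own model. `synthesize` and `animate` are placeholder callables standing in for Tortoise TTS and the temporal-GAN animator, not their real APIs.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field
from typing import Any, Callable


def tokenize(text: str) -> set[str]:
    """Lower-case word tokens with punctuation stripped."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


@dataclass
class Character:
    """One digital historical figure (hypothetical structure): its question-answer
    pairs plus the assets the voice-cloning and animation stages would need."""
    name: str
    portrait: str = ""  # path to the still 2D image used by the animator
    voice_samples: list[str] = field(default_factory=list)  # reference clips for voice cloning
    qa_pairs: list[tuple[str, str]] = field(default_factory=list)
    default_answer: str = "I am not sure how to answer that."

    def add_pair(self, question: str, answer: str) -> None:
        self.qa_pairs.append((question, answer))

    def answer(self, question: str, threshold: float = 0.3) -> tuple[str, float]:
        """Return (answer, score) for the stored question with the highest Jaccard
        token overlap, or the default answer if no score reaches the threshold."""
        query = tokenize(question)
        best_answer, best_score = self.default_answer, 0.0
        for stored_q, stored_a in self.qa_pairs:
            stored = tokenize(stored_q)
            union = query | stored
            score = len(query & stored) / len(union) if union else 0.0
            if score > best_score:
                best_answer, best_score = stored_a, score
        if best_score < threshold:
            return self.default_answer, best_score
        return best_answer, best_score


def respond(
    character: Character,
    question: str,
    synthesize: Callable[[str, list[str]], Any],
    animate: Callable[[str, Any], Any],
) -> Any:
    """Chain the stages: answer lookup -> cloned-voice audio -> talking-head video.
    `synthesize` and `animate` are placeholders for the TTS and animation stages."""
    text, _score = character.answer(question)
    audio = synthesize(text, character.voice_samples)
    return animate(character.portrait, audio)
```

In this sketch, adding a new character amounts to creating another `Character` with its own portrait, voice clips, and question-answer pairs, then registering it (for example, in a `dict[str, Character]`). A deployed system would replace `Character.answer` with a query to the trained Azure project.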

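The abstract describes a temporal GAN whose generator is trained against frame, sequence, and synchronization discriminators. The sketch below shows one common way such a generator objective is assembled. It assumes the standard non-saturating GAN loss, -log D(x), for each discriminator and adds an L1 reconstruction term. Both choices, the function names, and the weights `w_*` are assumptions, since the abstract does not specify the loss or its weighting. Real training would compute these terms on tensors in a deep learning framework rather than on Python floats.

```python
import math
from typing import Sequence


def adv_term(p: float, eps: float = 1e-7) -> float:
    """Non-saturating generator loss -log D(x) for one discriminator output
    D(x) in (0, 1]; it is zero when the discriminator is fully fooled (D = 1)."""
    return -math.log(min(max(p, eps), 1.0))


def generator_loss(
    frame_scores: Sequence[float],
    seq_score: float,
    sync_scores: Sequence[float],
    l1_error: float,
    w_frame: float = 1.0,
    w_seq: float = 1.0,
    w_sync: float = 1.0,
    w_l1: float = 1.0,
) -> float:
    """Weighted sum of the three adversarial terms and an L1 reconstruction term.

    frame_scores: frame-discriminator outputs, one per generated frame
    seq_score:    sequence-discriminator output for the whole generated clip
    sync_scores:  synchronization-discriminator outputs, one per audio/video snippet pair
    l1_error:     mean absolute pixel error against the ground-truth video
    """
    l_frame = sum(adv_term(p) for p in frame_scores) / len(frame_scores)
    l_seq = adv_term(seq_score)
    l_sync = sum(adv_term(p) for p in sync_scores) / len(sync_scores)
    return w_frame * l_frame + w_seq * l_seq + w_sync * l_sync + w_l1 * l1_error


# Example: every discriminator is undecided (0.5) and the reconstruction is exact.
print(round(generator_loss([0.5, 0.5], 0.5, [0.5], 0.0), 4))  # 2.0794
```

Averaging over frames and snippet pairs keeps each adversarial term on a comparable scale regardless of clip length. This leaves the relative influence of the three discriminators to the weights.
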
License

Copyright (c) 2023 Nitin Sakhare, Jyoti Bangare, Deepika Ajalkar, Gajanan Walunjkar, Madhuri Borawake, Anup Ingle

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

IJISAE open access articles are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. This license lets others share and adapt the material provided they give appropriate credit, provide a link to the license, and indicate if changes were made; if they remix, transform, or build upon the material, they must distribute their contributions under the same license as the original.